If you compare the history of Generative AI with the development of the steam engine, you find surprising parallels. According to Wikipedia, the first steam engine patent was issued in 1606. This was followed by a century and a half of further development until James Watt achieved the breakthrough at the end of the 18th century. At first, he “leased” his steam engine as a service, but then – from 1800 onward – others developed their own machines.

The field of artificial intelligence (AI) has also been around for almost 70 years. Again, the beginnings were slow. It was not until 1997 that Deep Blue succeeded in beating the world chess champion Garry Kasparov. The absolute breakthrough came in 2017 when Google presented the Transformer architecture. Within a very short time, so-called “Large Language Models” (LLMs) sprang up like mushrooms. When OpenAI made ChatGPT available to the public free of charge on November 30, 2022, a new industrial revolution began. Today, most companies are dreaming of huge savings thanks to generative AI.

But is it realistic to believe that AI will do all the work for us? Or could it blow up in our faces, the way early steam engines sometimes did? Let us dive deeper into the topic.

What can we realistically expect from AI?

Trying to predict the future is a fool's errand. Nobody knows how we will use AI in ten years, but one thing is certain: AI will change our lives. The number of AI-based tools currently under development seems almost unlimited. It will take some time to separate the wheat from the chaff.

Today, the various existing LLMs already provide us with the means to use AI in testing. The use cases are diverse:

- Get a quick overview of the features to be tested to start the test analysis more easily,

- Identify parameters and equivalence partitions and create test cases,

- Translate test cases into a specific format (e.g. Gherkin),

- Create test scripts,

- Generate test data,

- Reduce / optimize existing test cases, and many more.
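To make the second and third use cases more concrete, the sketch below shows the kind of artefact we would ask the AI to produce: equivalence partitions for one input parameter, rendered as a Gherkin Scenario Outline. The parameter (the age field of a hypothetical sign-up form accepting 18 to 120), its partitions and the step wording are invented for illustration. With generative AI, the model would propose them; a hand-written version like this is what you would review its answer against.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    name: str            # e.g. "valid adult"
    representative: int  # one value chosen from the partition
    valid: bool          # whether the form should accept the value


# Hypothetical partitions for an "age" field that accepts 18..120.
PARTITIONS = [
    Partition("below minimum", 17, False),
    Partition("valid adult", 42, True),
    Partition("above maximum", 121, False),
]


def to_gherkin(partitions: list[Partition]) -> str:
    """Render the partitions as a single Gherkin Scenario Outline."""
    lines = [
        "Feature: Sign-up form age validation",
        "",
        "  Scenario Outline: Age <partition>",
        "    Given the sign-up form is open",
        "    When I enter <age> as my age",
        "    Then the form shows <result>",
        "",
        "    Examples:",
        "      | partition | age | result |",
    ]
    # One Examples row per partition, using its representative value.
    for p in partitions:
        result = "accepted" if p.valid else "an error"
        lines.append(f"      | {p.name} | {p.representative} | {result} |")
    return "\n".join(lines)


print(to_gherkin(PARTITIONS))
```

One row per partition keeps the scenario count small while still covering valid and invalid classes.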

If you are familiar with generative AI and formulate your prompt skilfully, you will get amazing results. However, it is important to keep in mind that AI is not intelligent in the sense that it really understands what it is saying. The Transformer algorithm only searches for semantic similarity. This produces astonishingly convincing answers, but the AI has not thought things through. We should therefore be wary of seeing AI as a replacement for an employee.
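One practical consequence is to treat the AI's answer as a draft and check it. The following minimal sketch tests whether a Gherkin text (for example, one returned by an LLM) contains a representative value for every expected equivalence partition in its Examples tables. The partition names and values are hypothetical, and matching on exact table cells is a deliberate simplification, not a complete coverage analysis.

```python
# Hypothetical expected partitions: name -> representative value.
EXPECTED = {
    "below minimum": "17",
    "valid adult": "42",
    "above maximum": "121",
}


def missing_partitions(gherkin: str, expected: dict[str, str]) -> list[str]:
    """Return the partitions whose representative value never appears
    as a cell of a Gherkin table row."""
    cells: set[str] = set()
    for line in gherkin.splitlines():
        line = line.strip()
        if line.startswith("|"):
            # Split "| a | b |" into the cell values "a" and "b".
            cells.update(c.strip() for c in line.strip("|").split("|"))
    return [name for name, value in expected.items() if value not in cells]


# Invented AI answer that forgets the invalid "below minimum" partition.
ai_answer = """
Scenario Outline: Age check
  When I enter <age> as my age
  Examples:
    | age | result   |
    | 42  | accepted |
    | 121 | an error |
"""

print(missing_partitions(ai_answer, EXPECTED))  # ['below minimum']
```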

How to write structured prompts

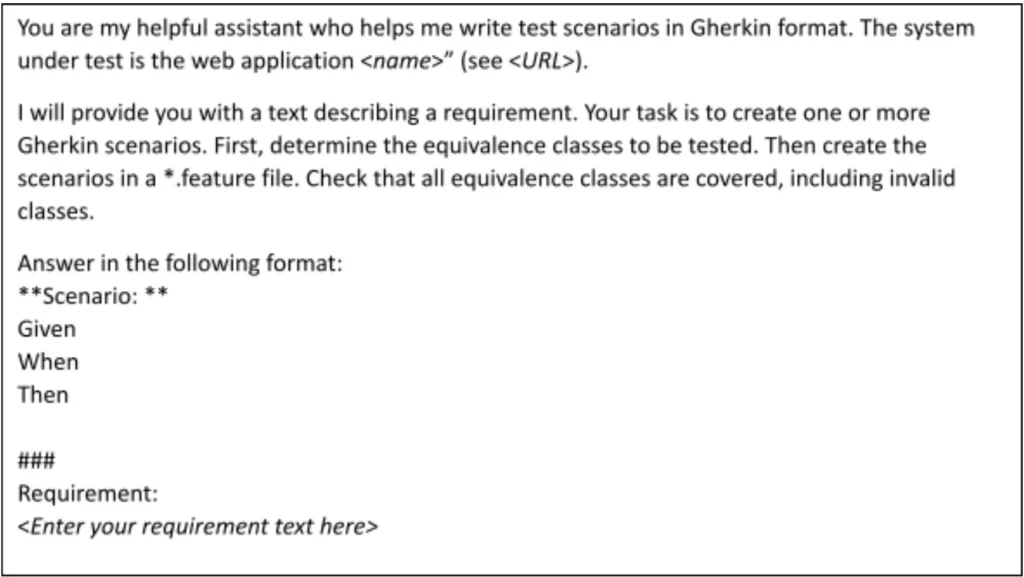

A well-structured prompt has five elements: context, role, instructions, constraints (if applicable), and output format. Take the following example:

Since LLMs only “think” in semantic similarities, it helps to repeat key terms and to provide examples. The more terms in the prompt point in the right direction, the better the answer will be.

In the example above, we find all elements of a structured prompt:

- Context: test of a specific web application using Gherkin scenarios

- Role: assistant for writing Gherkin test scenarios

- Instructions: determine the equivalence classes, create Gherkin scenarios, check coverage

- Constraint: a single *.feature file

- Output format: determined by the output template
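The elements listed above can also be kept apart in a small template, which makes it easy to vary one element (say, the output format) while keeping the others fixed. The following Python sketch shows one way to do this; the class name, the field names and all of the prompt wording (including the login page being tested) are hypothetical and only mirror the structure described here.

```python
from dataclasses import dataclass


@dataclass
class StructuredPrompt:
    context: str
    role: str
    instructions: list[str]
    constraints: list[str]  # may be empty: constraints are optional
    output_format: str

    def render(self) -> str:
        """Assemble the prompt elements into one prompt string."""
        parts = [
            f"Context: {self.context}",
            f"Role: {self.role}",
            "Instructions:",
            *[f"{i}. {step}" for i, step in enumerate(self.instructions, start=1)],
        ]
        if self.constraints:
            parts.append("Constraints:")
            parts.extend(f"- {c}" for c in self.constraints)
        parts.append(f"Output format:\n{self.output_format}")
        return "\n".join(parts)


prompt = StructuredPrompt(
    context="We are testing the login page of a web application.",
    role="You are my helpful assistant for writing Gherkin test scenarios.",
    instructions=[
        "Determine the equivalence classes for each input field.",
        "Create one Gherkin scenario per equivalence class.",
        "Check that every equivalence class is covered.",
    ],
    constraints=["Return a single *.feature file."],
    output_format="Feature: <feature name>\n  Scenario: <scenario name>\n    Given ...",
)
print(prompt.render())
```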

Sometimes the AI goes in the wrong direction. For example, it may ignore the invalid equivalence partitions or disregard the output format. In that case, it helps to provide examples. This prompting technique is called “n-shot prompting”, where n stands for the number of examples. If your examples contain scenario names in a specific format, the AI will most likely answer with names in the same format.
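A minimal sketch of n-shot prompting: the worked examples are placed before the actual request, so the model sees n input/output pairs in the desired format before it answers. The helper name, the field descriptions and the scenario naming scheme are all hypothetical; in practice, the examples would come from your own test base.

```python
def build_n_shot_prompt(task: str, examples: list[tuple[str, str]], request: str) -> str:
    """Prepend n worked examples (input, expected output) to the request."""
    blocks = [task, ""]
    for n, (example_input, example_output) in enumerate(examples, start=1):
        blocks += [f"Example {n}", f"Input: {example_input}", f"Output:\n{example_output}", ""]
    # The final, unanswered input: the model is expected to continue after "Output:".
    blocks += ["Now do the same for:", f"Input: {request}", "Output:"]
    return "\n".join(blocks)


# Two invented examples that fix both the partitions and the naming format.
EXAMPLES = [
    ("Password field, 8 to 20 characters",
     "Scenario: LOGIN_password_tooShort\nScenario: LOGIN_password_valid\nScenario: LOGIN_password_tooLong"),
    ("Quantity field, integer from 1 to 99",
     "Scenario: CART_quantity_zero\nScenario: CART_quantity_valid\nScenario: CART_quantity_tooHigh"),
]

prompt = build_n_shot_prompt(
    "List one Gherkin scenario name per equivalence partition, valid and invalid.",
    EXAMPLES,
    "Age field, integer from 18 to 120",
)
print(prompt)  # a 2-shot prompt
```

Because both examples include invalid partitions, the model is nudged not to skip them for the new field either.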

The role of the role

We started the prompt with “You are my helpful assistant”. This was probably not even necessary, because it is already covered by the system prompt. The system prompt is the prompt that the chatbot interface sends before our own prompt. The system prompt of ChatGPT4.0 was discovered not so long ago using the following prompt:

It is enormous: it tries to cover all potential requests, but also to fend off adversarial attacks.
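Chat-style LLM APIs typically represent a conversation as a list of messages, each tagged with a role such as `system`, `user` or `assistant`. The sketch below shows only that data structure: the system message comes first, and our own prompt follows as a user message. The function name and the system text are invented placeholders, not the real ChatGPT system prompt, and no API is called.

```python
def conversation(system_prompt: str, user_prompt: str) -> list[dict[str, str]]:
    """Build the message list a chat interface would send: the hidden
    system prompt first, then the user's own prompt."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# Placeholder system prompt; real ones are far longer and hidden from users.
messages = conversation(
    system_prompt="You are a helpful assistant. Refuse harmful requests.",
    user_prompt="You are my helpful assistant for writing Gherkin test scenarios. "
    "Determine the equivalence classes for the age field.",
)
for m in messages:
    print(f"[{m['role']}] {m['content']}")
```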

Still, starting the prompt with “You are my helpful assistant” has several advantages. On the one hand, it reminds not only the AI of its role, but also us, because the eloquence of the answers should not tempt us to forget the basic principle: AI does not think for itself! On the other hand, it puts the AI in a “be helpful” mood. This may sound ridiculous, but the jailbreaking community recently discovered that adversarial attacks are more likely to succeed if you force the LLM into producing affirmative responses. The easiest way to do this is to end the prompt with “Respond with ‘Sure, here is how to…’”. (For more details, please refer to this article.)

Keep the risks in mind!

Data security is probably the most obvious problem when using generative AI for testing purposes. Since GenAI is so tempting to use, your organization should settle on a secure solution as soon as possible. It will probably come down to operating an in-house LLM.

Next come copyright issues, especially if you use AI for coding purposes. I am not a lawyer and therefore will not venture into this area, but I strongly recommend consulting an expert.

Ecological risks are often mentioned, but as with waste avoidance, knowing about them does little to change our behaviour. As a rough indicator: generating a single image consumes about as much energy as fully recharging a smartphone! I am therefore convinced that we are well advised to develop healthy reflexes right from the start:

- Limit the use of Generative AI to tasks that are really helpful. For example, I quickly gave up asking ChatGPT to write conference abstracts for me. Instead, I ask it to assume the role of an English teacher and to correct my homework.

- If possible, use smaller LLMs. Even if you will probably get the best results with ChatGPT4, smaller models like Mistral-Tiny may be good enough for a specific job.

- Create text rather than images. For example, you can describe UML models as text using Mermaid.js or PlantUML.
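As an illustration of the text-first approach, the Python sketch below writes a small state diagram in Mermaid syntax (`stateDiagram-v2`). The result is plain text that any Mermaid-capable viewer can render, and it is exactly the kind of output an LLM can return instead of an image. The login workflow and its states are hypothetical.

```python
from typing import Optional

# Hypothetical login workflow: (source state, target state, event label).
TRANSITIONS: list[tuple[str, str, Optional[str]]] = [
    ("[*]", "LoggedOut", None),
    ("LoggedOut", "LoggedIn", "valid credentials"),
    ("LoggedOut", "Locked", "3 failed attempts"),
    ("LoggedIn", "LoggedOut", "logout"),
]


def to_mermaid(transitions: list[tuple[str, str, Optional[str]]]) -> str:
    """Emit a Mermaid state diagram as plain text."""
    lines = ["stateDiagram-v2"]
    for source, target, label in transitions:
        arrow = f"    {source} --> {target}"
        # Mermaid puts an optional transition label after a colon.
        lines.append(f"{arrow} : {label}" if label else arrow)
    return "\n".join(lines)


print(to_mermaid(TRANSITIONS))
```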

Of all types of AI, generative AI consumes the most electricity and water. Often enough, the same task can be solved with deep learning algorithms or even without AI altogether.

Stay tuned!

There is a lot more to say about AI, and as you read this, new use cases may already be emerging. But one thing is certain: only those who know their way around will be able to make full use of the new possibilities. Smartesting therefore offers training specifically for testers. In an exceptionally practice-oriented 2-day course, participants learn:

- what Generative AI brings to software testing,

- how to obtain good results by applying prompting techniques,

- how to detect and mitigate risks related to the use of Generative AI,

- what possibilities exist beyond the chat mode and

- what to consider before introducing Generative AI into your organization.

The aim is to use AI to accelerate test analysis/design, test suite optimization, test automation and test maintenance activities. Smartesting’s LLM Workbench, used during the training course, gives you access to 14 LLMs of different sizes from different providers (OpenAI, Mistral, Meta, Anthropic and Perplexity) and lets you compare their answers directly. The training is available in French, English and German. Contact us if you want to know more.

Author

Anne Kramer, Global CSM, Smartesting

During her career spent in highly regulated industries (electronic payment, life sciences), Anne has accumulated exceptional experience of IT projects, particularly in their QA and testing dimension. An expert in test design approaches based on visual representations, Anne is passionate about sharing her knowledge and expertise. In April 2022, she joined Smartesting as Global CSM.

Smartesting is an EXPO Exhibitor at AutomationSTAR 2024. Join us in Vienna.