In the rapidly evolving field of software development, efficient software testing has emerged as a critical component in the quality assurance process. As we navigate through 2023, several prominent trends are shaping the landscape of software testing, with artificial intelligence (AI) taking center stage. We’ll delve into the current state of software testing, focusing on the latest trends, the increasing collaboration with AI, and the most innovative tools.

Test Automation Trends

Being aware of QA trends is critical. By staying up to date on the latest developments and practices in quality assurance, professionals can adapt their approaches to meet evolving industry standards. Based on the World Quality Report by Capgemini & Sogeti, and The State of Testing by PractiTest, popular QA trends currently include:

- Test Automation: Increasing adoption for efficient and comprehensive testing.

- Shift-Left and Shift-Right Testing: Early testing and testing in production environments for improved quality.

- Agile and DevOps Practices: Integrating testing in Agile workflows and embracing DevOps principles.

- AI and Machine Learning: Utilizing AI/ML for intelligent test automation and predictive analytics.

- Continuous Testing: Seamless and comprehensive testing throughout the software delivery process.

- Cloud-Based Testing: Leveraging cloud computing for scalable and cost-effective testing environments.

- Robotic Process Automation (RPA): Automating repetitive testing tasks and processes to enhance efficiency and accuracy.

QA and AI Collaboration

It’s no secret that AI is transforming our lives, and collaborating with ChatGPT can automate a substantial portion of QA routines. We’ve compiled a list of helpful prompts to streamline your testing process and save time.

Test Case Generation

Here are some prompts to assist in generating test cases using AI:

“Generate test cases for {function_name} considering all possible input scenarios.”

“Create a set of boundary test cases for {module_name} to validate edge cases.”

“Design test cases to verify the integration of {component_A} and {component_B}.”

“Construct test cases for {feature_name} to validate its response under different conditions.”

“Produce test cases to assess the performance of {API_name} with varying loads.”

“Develop test cases to check the error handling and exceptions in {class_name}.”

Feel free to modify these prompts to better suit your specific testing requirements.
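If you reuse these prompts often, the placeholders in curly braces can be filled in programmatically. The following is a minimal sketch, assuming the prompts are kept as Python format strings; the dictionary keys, the `build_prompt` helper, and the `checkout` module name are hypothetical and only serve as an illustration.

```python
# Three of the prompts above, stored as str.format templates.
# The dictionary keys are hypothetical labels chosen for this sketch.
PROMPT_TEMPLATES = {
    "all_inputs": "Generate test cases for {function_name} considering all possible input scenarios.",
    "boundary": "Create a set of boundary test cases for {module_name} to validate edge cases.",
    "integration": "Design test cases to verify the integration of {component_A} and {component_B}.",
}


def build_prompt(kind: str, **values: str) -> str:
    """Fill one template. Raises KeyError if the kind or a placeholder value is missing."""
    return PROMPT_TEMPLATES[kind].format(**values)


if __name__ == "__main__":
    # "checkout" is an illustrative module name, not one from the article.
    print(build_prompt("boundary", module_name="checkout"))
```

The resulting string can be pasted into the chat or sent through whichever AI client you already use.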

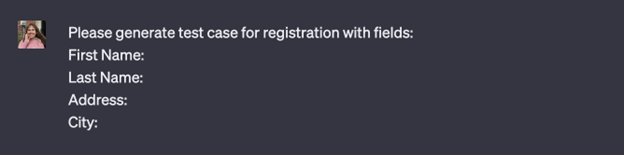

Example

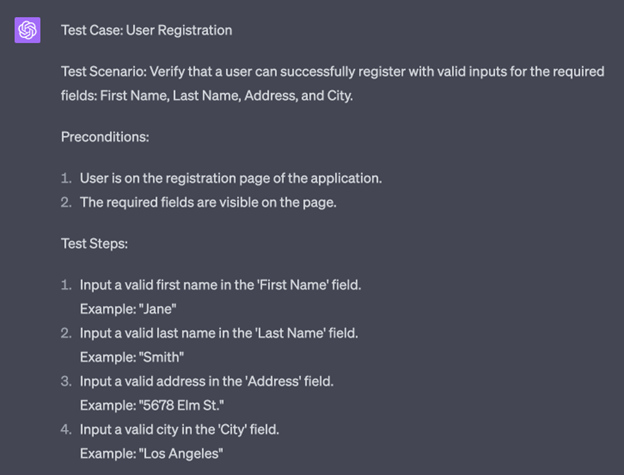

We asked for a test case to be generated for a registration process with specific fields: First Name, Last Name, Address, and City.

AI provided a test case named “User Registration” for the scenario where a user attempts to register with valid inputs for the required fields. The test case includes preconditions, test steps, test data, and the expected result.
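The structure described above (preconditions, test steps, test data, expected result) maps naturally onto a small data type. The sketch below is an assumption about how such a test case could be represented in Python; the `ManualTestCase` class, the `render` helper, and all sample values are hypothetical and do not reproduce the AI output shown in the screenshot.

```python
from dataclasses import dataclass


@dataclass
class ManualTestCase:
    """One test case with the four parts named in the text."""
    name: str
    preconditions: list[str]
    steps: list[str]
    test_data: dict[str, str]
    expected_result: str


def render(case: ManualTestCase) -> str:
    """Format a test case as plain text for review or for pasting into a tracker."""
    lines = [f"Test case: {case.name}", "Preconditions:"]
    lines += [f"  - {p}" for p in case.preconditions]
    lines.append("Steps:")
    lines += [f"  {i}. {s}" for i, s in enumerate(case.steps, start=1)]
    lines.append("Test data:")
    lines += [f"  {k}: {v}" for k, v in case.test_data.items()]
    lines.append(f"Expected result: {case.expected_result}")
    return "\n".join(lines)


# Illustrative values only; the field names come from the article, the data does not.
registration_case = ManualTestCase(
    name="User Registration",
    preconditions=["The registration page is open"],
    steps=["Fill in all required fields with valid data", "Submit the form"],
    test_data={
        "First Name": "Jane",
        "Last Name": "Doe",
        "Address": "1 Main St",
        "City": "Berlin",
    },
    expected_result="The user is registered successfully",
)

if __name__ == "__main__":
    print(render(registration_case))
```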

Test Code Generation

In the same way, you can create automated tests for web pages and their test scenarios.

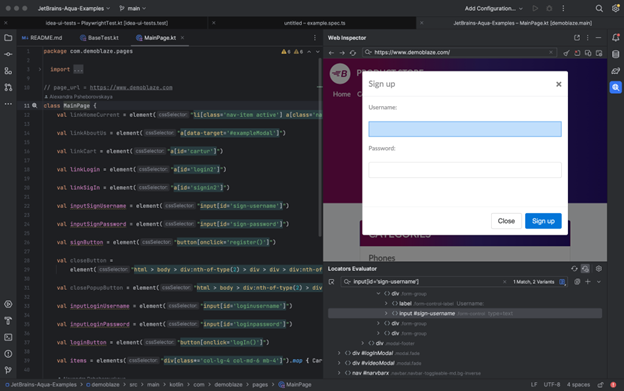

To enhance the relevance of the generated code, it is important to leverage your expertise in test automation. We recommend studying the tutorial, which provides tangible examples of automatically generating UI tests for web pages, and using appropriate tools, such as JetBrains Aqua, to write your tests.

Progressive Tools

Using advanced tools for test automation is essential because they enhance efficiency by streamlining the testing process and providing features like test code generation and code insights. These tools also promote scalability, allowing for the management and execution of many tests as complex software systems grow.

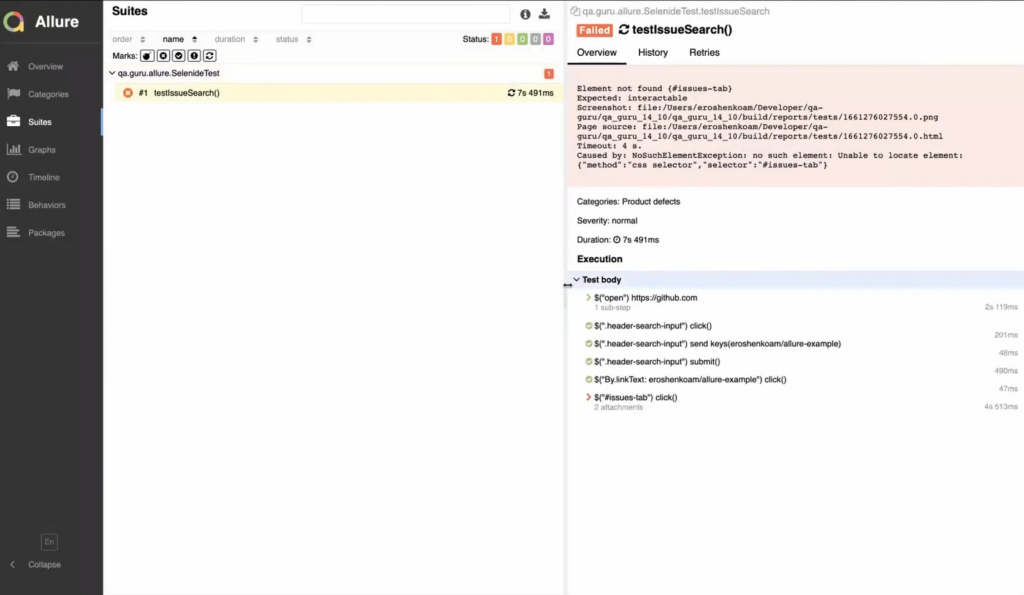

UI Test Automation

To efficiently explore a web page and identify available locators:

Open the desired page.

Interact with the web elements by clicking on them.

Add the generated code to your Page Object.

This approach allows for a systematic and effective way of discovering and incorporating locators into your test automation framework.
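To show where discovered locators end up, here is a minimal Page Object sketch for the registration form mentioned earlier. It is not code generated by Aqua. It assumes a driver that exposes `find_element(by, value)` and elements with `send_keys` and `click`, as Selenium WebDriver does; the element ids, the `RegistrationPage` class, and the `FakeDriver` stand-in (used so the sketch runs without a browser) are all hypothetical.

```python
class RegistrationPage:
    """Page Object: locators live in one place, tests call intent-level methods."""

    # (strategy, value) pairs; "id" and "css selector" are the strings behind
    # Selenium's By.ID and By.CSS_SELECTOR. The ids themselves are hypothetical.
    FIRST_NAME = ("id", "first-name")
    LAST_NAME = ("id", "last-name")
    ADDRESS = ("id", "address")
    CITY = ("id", "city")
    SUBMIT = ("css selector", "button[type='submit']")

    def __init__(self, driver):
        self.driver = driver

    def fill(self, first_name, last_name, address, city):
        """Type a value into each field and return self to allow chaining."""
        fields = (
            (self.FIRST_NAME, first_name),
            (self.LAST_NAME, last_name),
            (self.ADDRESS, address),
            (self.CITY, city),
        )
        for locator, value in fields:
            self.driver.find_element(*locator).send_keys(value)
        return self

    def submit(self):
        self.driver.find_element(*self.SUBMIT).click()


class FakeElement:
    """Records interactions instead of touching a real page."""

    def __init__(self):
        self.typed = ""
        self.clicked = False

    def send_keys(self, text):
        self.typed += text

    def click(self):
        self.clicked = True


class FakeDriver:
    """Stand-in for a real WebDriver; creates one FakeElement per locator."""

    def __init__(self):
        self.elements = {}

    def find_element(self, by, value):
        return self.elements.setdefault((by, value), FakeElement())


if __name__ == "__main__":
    driver = FakeDriver()
    RegistrationPage(driver).fill("Jane", "Doe", "1 Main St", "Berlin").submit()
    print(driver.elements[RegistrationPage.CITY].typed)
```

With a real browser session, the same `RegistrationPage` class should work unchanged when given a Selenium driver in place of `FakeDriver`, provided the locators match the page under test.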

Code Insights

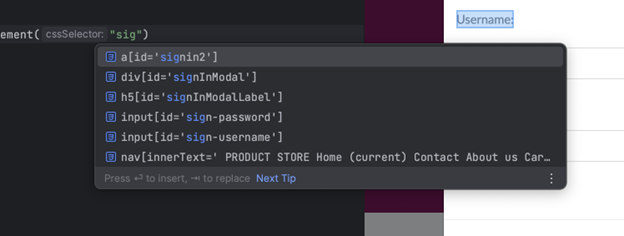

To efficiently search for available locators based on substrings or attributes, you can leverage autocompletion functionality provided by the JetBrains Aqua IDE or plugin.

In cases where you don’t remember the location to which a locator leads, you can navigate seamlessly between the web element and the corresponding source code. This allows you to quickly locate and understand the context of the locator, making it easier to maintain and modify your test automation scripts. This flexibility facilitates efficient troubleshooting and enhances the overall development experience.

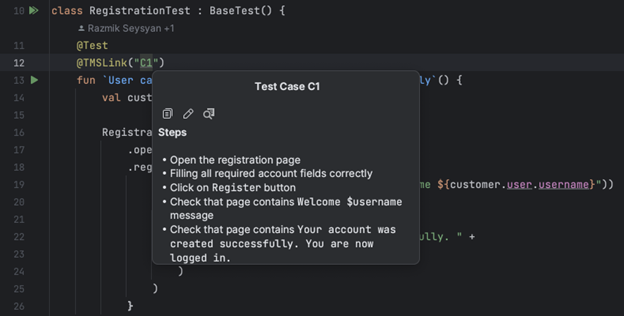

Test Case As A Code

The Test Case As A Code approach is valuable for integrating manual testing and test automation. Creating test cases alongside the code enables close collaboration between manual testers and automation engineers. New test cases can be easily attached to their corresponding automation tests and removed once automated. Because manual and automated tests live side by side, keeping them synchronized for consistency and accuracy is no longer a separate challenge. Storing test cases in a version control system (VCS) also brings versioning, collaboration, and traceability, enhancing the overall test development process.
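One way to picture attaching test cases to automation tests is a small registry kept in the same repository. The sketch below is an assumption about how this could look in plain Python, not a description of how Aqua implements it; the case ids, the `automates` decorator, and the `pending_manual_cases` helper are hypothetical.

```python
# Manual test cases stored next to the code (hypothetical ids and titles).
MANUAL_CASES = {
    "TC-1": "Register with valid First Name, Last Name, Address, and City",
    "TC-2": "Register with an empty City field",
}

# Filled in by the decorator below: case id -> name of the automated test.
AUTOMATED: dict[str, str] = {}


def automates(case_id: str):
    """Attach an automated test to a manual test case; fail fast on unknown ids."""
    if case_id not in MANUAL_CASES:
        raise KeyError(f"Unknown test case: {case_id}")

    def decorator(func):
        AUTOMATED[case_id] = func.__name__
        return func

    return decorator


@automates("TC-1")
def test_register_with_valid_data():
    # The real UI steps would go here.
    pass


def pending_manual_cases() -> list[str]:
    """Return ids of cases that still have no automated test attached."""
    return sorted(set(MANUAL_CASES) - set(AUTOMATED))


if __name__ == "__main__":
    print(pending_manual_cases())
```

Because the registry and the tests change in the same commit, the VCS history shows when each manual case became automated.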

Stay Tuned

The industry’s rapid development is exciting, and we are proud to be a part of this growth. We have created JetBrains Aqua, an IDE specifically designed for test automation. With Aqua, we aim to provide a cutting-edge solution that empowers testers and QA professionals. Stay tuned for more updates as we continue to innovate and contribute to the dynamic test automation field!

Author

Alexandra Psheborovskaya (Alex Pshe)

Alexandra works as an SDET and a Product Manager on the Aqua team at JetBrains. She shares her knowledge with others by mentoring QA colleagues, such as in Women In Tech programs, supporting women in testing as a Women Techmakers Ambassador, hosting a quality podcast, and speaking at professional conferences.

JetBrains is an EXPO Gold partner at AutomationSTAR 2023. Join us in Berlin!