In long-lived software, the majority of errors originate in areas of the code that were recently modified. Even with systematic testing processes, a significant portion of such changes often gets shipped to production entirely untested, leading to a much higher probability of field errors. Test Gap Analysis (TGA) is designed to identify code changes that have not been tested, enabling you to systematically reduce this risk.

Scientific background

The efficacy of TGA is supported by scientific studies. One such study on a business information system comprising approximately 340,000 lines of C# code, conducted over 14 months, revealed that about half of all code changes went into production untested, even with a systematically planned and executed test process. Critically, the error probability in this changed, untested code was five times higher than in unchanged code (and also higher than in changed and tested code).

This research, along with comparable analyses conducted in various systems, programming languages, and companies, underscores the challenge of reliably testing changed code in large systems. The core problem is not a lack of tester discipline or effort, but rather the inherent difficulty in identifying all relevant changes without appropriate analytical tools.

How does TGA work?

TGA integrates both static and dynamic analyses to reveal untested code changes. The process involves several key steps:

- Static Analysis: TGA begins by comparing the current source code of the system under test with the source code at a baseline, e.g., the last release. This identifies newly developed or modified code. The analysis filters out refactorings (e.g., changes in documentation, renaming of methods or code reorganization), which do not alter system behavior, thereby focusing attention on changes that could introduce errors.

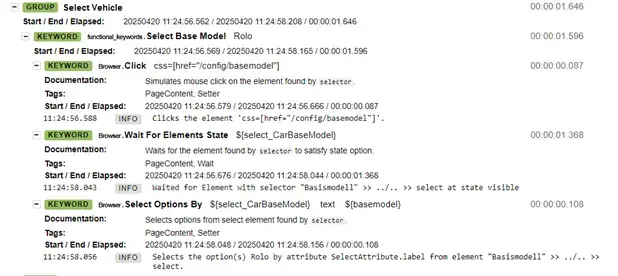

- Dynamic Analysis: Concurrently, TGA collects test coverage data from all executed tests, including both automated and manual test cases. This provides information on which code has been executed during testing.

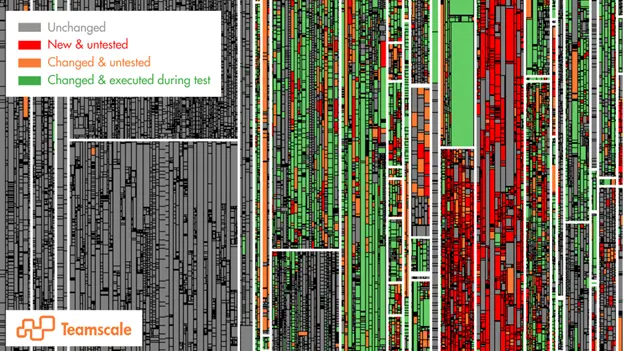

- Combination and Visualization: The results from both analyses are then combined to highlight the “Test Gaps” – those code areas that were changed or newly implemented but were not executed during testing. These results are typically visualized using treemaps, where rectangles represent methods or functions (sized proportionally to the amount of code inside them).

On these treemaps:

- Gray represents methods that have not been changed since the last release.

- Green indicates methods that were changed (or newly written) and were executed during testing.

- Orange (and red) signifies methods that were changed (or newly written) but were not executed in any test, highlighting the critical “gaps” where most field errors are likely to occur.

Impact

TGA is highly effective when applied continuously, e.g., to provide feedback to developers on pull/merge requests, to product owners on tickets, or the test managers on dedicated dashboards – enabling informed decisions about additional testing needs. It significantly reduces the amount of untested changes that get shipped, and has shown to reduce field errors by 50%.

Learn more about TGA and other test optimizations in our free online deep dives!

Author

Dr. Sven Amann

Sven is developer and software-quality consultant at CQSE GmbH. He studied computer science at TU Darmstadt and PUC de Rio de Janeiro and did his PhD in software engineering at TU Darmstadt. Sven is a requested (keynote) speaker on software quality topics at conferences, meetups, companies and universities around the world, drawing inspiration from his vast project experience working with CQSE’s many customers across all industries.

CQSE are exhibitors in this years’ AutomationSTAR Conference EXPO. Join us in Amsterdam 10-11 November 2025.